Salesforce Data Cloud Setup Guide

This guide describes the Salesforce Data Cloud setup required for the "Personalization with CDP" use case.

Data Cloud Provisioning

-

Log in to your Data Cloud instance using the link and credentials provided in your admin email. If Data Cloud was deployed in your existing Salesforce instance, use your current Salesforce Admin password.

-

Click the Setup icon and then Data Cloud Setup. If you don’t see this option, either refresh your page or log out and back in with your admin user credentials.

-

Click Get Started. Setup can take a few minutes. Your Data Cloud instance is successfully set up when you see a green check mark for all the steps. If not, correct the issue and finish the setup.

Connect Data

Connectors are specialized data streams that communicate with external sources to transmit data into a Data Cloud data source object. Data Cloud has connectors for Marketing Cloud Email Studio, MobileConnect, MobilePush, Marketing Cloud Data Extensions, Salesforce CRM, Ingestion API, Interaction Studio, and data outside Salesforce via cloud storage providers.

The Personalization with CDP use case uses the following connectors:

-

Salesforce CRM - to connect data from Salesforce CRM instance to Data Cloud

-

Ingestion API - to connect data from external source systems like Snowflake and Azure via MuleSoft’s Salesforce CDP connector.

Salesforce CRM Connector

In Data Cloud, you can establish a connection to your Salesforce CRM org. Follow the steps below to establish a connection.

-

In Data Cloud, select the Setup icon and then Data Cloud Setup.

-

Select Salesforce CRM.

-

To connect a Salesforce org to Data Cloud, click New. You can connect the Salesforce org that has Data Cloud provisioned, or you can Connect Another Org (external orgs).

-

To connect your Salesforce orgs to Data Cloud, click Connect. If connecting an external Salesforce org, enter your user credentials to establish the connection with Data Cloud.

-

After you connect your Salesforce org, you can view the connection details.

-

Connector Name: The name of the Salesforce org that is connected to Data Cloud.

-

Connector Type: Identifies the name of the data connection type.

-

Status: Shows the org’s status.

-

Org Id: The Salesforce org Id connected to Data Cloud.

-

Updated: The date and timestamp of when the Salesforce org was connected to Data Cloud.

-

Your Salesforce org is now connected. With the connection established, the Data Cloud admin can either use bundles that automatically deploy data or set up their own data streams.

Ingestion API

You can push data from an external system into Data Cloud via the Ingestion API. This RESTful API offers two interaction patterns: bulk and streaming. The streaming pattern accepts incremental updates to a data set as those changes are captured, while the bulk pattern accepts CSV files in cases where data syncs occur periodically. The same data stream can accept data from the streaming and the bulk interaction.

For the "Personalization with CDP" use case, we are pushing data from external systems Snowflake and Azure through MuleSoft’s Salesforce CDP connector into Data Cloud via the Ingestion API.
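As a rough illustration of the streaming pattern, the sketch below builds (but does not send) an HTTPS request carrying one incremental record. The endpoint path, the `{"data": [...]}` envelope, the tenant host, the source name `Personalization_Source`, and the record fields are all assumptions made for this example; check them against the Ingestion API reference before relying on them. In this use case the MuleSoft connector issues these calls, so hand-written requests like this are only useful for testing.

```python
import json
import urllib.request


def build_streaming_request(tenant_host, source_api_name, object_name,
                            records, access_token):
    """Build (without sending) a POST request for the streaming pattern."""
    # Assumed URL layout; confirm it against the Ingestion API reference.
    url = (f"https://{tenant_host}/api/v1/ingest/sources/"
           f"{source_api_name}/{object_name}")
    # Assumed payload envelope: a JSON object with a "data" array of records.
    body = json.dumps({"data": records}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {access_token}",
            "Content-Type": "application/json",
        },
    )


# Hypothetical host, source name, token, and record fields.
request = build_streaming_request(
    tenant_host="example-tenant.invalid",
    source_api_name="Personalization_Source",
    object_name="Loyalty",
    records=[{
        "member_id": "M-001",
        "points": 1200,
        "updated_at": "2024-01-15T10:30:00.000Z",
    }],
    access_token="<access-token>",
)
print(request.get_method(), request.full_url)
```

Building the request separately from sending it keeps the example free of network access and makes the URL and payload easy to inspect.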

Follow the steps in each section below to set up and configure the Ingestion API to push data from external systems.

Set Up an Ingestion API Connector

-

In Data Cloud, select Data Cloud Setup.

-

Click Ingestion API.

-

Click New, enter a name for the API source, then click Save. On the details page for the new connector, you must upload a schema file in OpenAPI (OAS) format with the .yaml file extension. The schema file describes how data from your source system is structured.

| Ingestion API schemas have set requirements. Review the schema requirements before ingestion. |

-

Click Upload Schema and navigate to the location of the file you want to use. Select the file and click Open.

-

Preview all the detected objects and their attributes in your schema.

-

Click Save. The connector page reflects the updated status.

-

After the schema file is uploaded, you can create data streams to begin sending data from your source system.

| For the "Personalization with CDP" use case, we have added schema for the following objects. * Loyalty * Subscription * WebEngagement * EmailEngagement |

The schema used for the "Personalization with CDP" use case can be found in the implementation template.

Schema Requirements

To create an ingestion API source in Data Cloud, the schema file you upload must meet specific requirements:

-

Uploaded schemas have to be in valid OpenAPI format with a .yml or .yaml extension. OpenAPI version 3 is supported (3.0.0, 3.0.1, 3.0.2).

-

Objects cannot have nested objects.

-

Each schema must have at least one object. Each object must have at least one field.

-

Objects cannot have more than 1000 fields.

-

Object names cannot be longer than 80 characters.

-

Object names must contain only a-z, A-Z, 0-9, _, -. No unicode characters.

-

Field names must contain only a-z, A-Z, 0-9, _, -. No unicode characters.

-

Field names cannot be any of these reserved words: date_id, location_id, dat_account_currency, dat_exchange_rate, pacing_period, pacing_end_date, rowcount, version.

-

Field names cannot contain the string __.

-

Field names cannot exceed 80 characters.

-

Fields meet the following type and format:

-

For text or boolean type: string

-

For number type: number

-

For date type: string; format: date-time

-

-

Object names cannot be duplicated; case-insensitive.

-

Objects cannot have duplicate field names; case-insensitive.

-

Date strings in your object payloads must be in ISO 8601 UTC Zulu with format yyyy-MM-dd'T'HH:mm:ss.SSS'Z'.
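Several of the naming rules above lend themselves to a quick local check before uploading a schema. The following is a minimal sketch, not an official validator: it assumes the schema has already been loaded into a plain dictionary of `{object_name: {field_name: field_spec}}`, and the object and field names in the example are hypothetical. It does not parse YAML or check field types and formats.

```python
import re

# Rules taken from the requirements list above.
NAME_PATTERN = re.compile(r"^[A-Za-z0-9_-]+$")
RESERVED_FIELD_NAMES = {
    "date_id", "location_id", "dat_account_currency", "dat_exchange_rate",
    "pacing_period", "pacing_end_date", "rowcount", "version",
}
MAX_NAME_LENGTH = 80
MAX_FIELDS_PER_OBJECT = 1000


def validate_schema(objects):
    """Return a list of problems found in {object: {field: spec}}."""
    problems = []
    if not objects:
        problems.append("schema must have at least one object")
    seen_objects = set()
    for obj_name, fields in objects.items():
        if not NAME_PATTERN.match(obj_name) or len(obj_name) > MAX_NAME_LENGTH:
            problems.append(f"invalid object name: {obj_name}")
        # Duplicate detection is case-insensitive.
        if obj_name.lower() in seen_objects:
            problems.append(f"duplicate object name: {obj_name}")
        seen_objects.add(obj_name.lower())
        if not 1 <= len(fields) <= MAX_FIELDS_PER_OBJECT:
            problems.append(
                f"{obj_name}: needs 1 to {MAX_FIELDS_PER_OBJECT} fields")
        seen_fields = set()
        for field_name, spec in fields.items():
            label = f"{obj_name}.{field_name}"
            if (not NAME_PATTERN.match(field_name)
                    or "__" in field_name
                    or len(field_name) > MAX_NAME_LENGTH):
                problems.append(f"invalid field name: {label}")
            # Assumption: reserved words are compared exactly as written.
            if field_name in RESERVED_FIELD_NAMES:
                problems.append(f"reserved field name: {label}")
            if field_name.lower() in seen_fields:
                problems.append(f"duplicate field name: {label}")
            seen_fields.add(field_name.lower())
            # Objects cannot contain nested objects.
            if spec.get("type") == "object" or "properties" in spec:
                problems.append(f"nested object not allowed: {label}")
    return problems


# Hypothetical schema fragment with two deliberate mistakes.
schema = {
    "Loyalty": {"member_id": {"type": "string"}, "points": {"type": "number"}},
    "Subscription": {"version": {"type": "string"},
                     "plan__tier": {"type": "string"}},
}
for problem in validate_schema(schema):
    print(problem)
```

With this input the check flags the reserved name `version` and the double underscore in `plan__tier`, while the `Loyalty` object passes.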

When updating your schema, be aware:

-

Existing field data types cannot be changed.

-

Upon updating an object, all the existing fields for that object must be present.

-

Your updated schema file needs to include only the changed objects, so you don’t have to provide a comprehensive list of objects each time.

-

A date field must be present for objects that are intended for the Profile or Engagement category. Objects of type Other do not impose the same requirement.

Refer to this link for an example schema.
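The update rules above (field types cannot change, and an updated object must still carry all of its existing fields) can be checked locally before uploading a new schema file. This is a minimal sketch under the assumption that both schema versions have been reduced to `{object_name: {field_name: type}}` dictionaries; the object and field names are hypothetical.

```python
def check_schema_update(current, updated):
    """Compare two {object: {field: type}} mappings and list rule violations."""
    problems = []
    for obj_name, new_fields in updated.items():
        old_fields = current.get(obj_name)
        if old_fields is None:
            # A brand-new object has nothing to be compared against.
            continue
        for field_name, old_type in old_fields.items():
            if field_name not in new_fields:
                problems.append(
                    f"{obj_name}.{field_name}: existing field is missing")
            elif new_fields[field_name] != old_type:
                problems.append(
                    f"{obj_name}.{field_name}: type changed from "
                    f"{old_type} to {new_fields[field_name]}")
    return problems


# Hypothetical current schema and a partial update touching one object.
current = {
    "Loyalty": {"member_id": "string", "points": "number"},
    "Subscription": {"subscription_id": "string"},
}
updated = {
    "Loyalty": {"member_id": "string", "points": "string", "tier": "string"},
}
for problem in check_schema_update(current, updated):
    print(problem)
```

Objects that are absent from the updated file are ignored, which mirrors the rule that an update only has to list the objects that changed.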

Create a Data Stream

Data streams are the connections and associated data ingested into Data Cloud. Data Cloud includes many data streams that can operate on different refresh schedules. Check Data Stream Schedule in Data Cloud to learn how and when these data streams update.

Create a Data Stream Using Salesforce CRM Starter Bundle

Create a data stream using a starter bundle to begin the flow of data from a Salesforce CRM source. Note: You can configure only one starter bundle at a time. For more details on Salesforce CRM Starter Data Bundles, see Salesforce CRM Starter Data Bundles.

For the "Personalization with CDP" use case, we have created data streams using the Service Cloud starter bundle.

-

Navigate to the Data Streams tab.

-

Click New.

-

Select the Salesforce CRM data source and click Next.

-

Select the Salesforce org where your data resides. If you have only one org connected to Data Cloud, it’s selected by default.

-

The Data Bundles tab is selected by default. Choose a starter bundle (Sales Cloud, Service Cloud, or Loyalty Management).

| The Loyalty Management bundle is only accessible when object permissions have been set in Data Cloud for your Loyalty Management objects. |

-

Review the list of Salesforce objects and their fields to be ingested. Deselect any fields that are not required for your data stream, then click Next.

-

Review the list of objects and their fields and click Next.

-

Review the list of data streams that Data Cloud is going to create and click Deploy.

-

Click one of the newly created data streams to review the field list.

-

Click Review Mappings.

-

Review how Data Source Objects are mapped to Data Model Objects.

Create a Salesforce CRM Data Stream

Create a data stream to begin the flow of data from a Salesforce CRM data source. Add additional permissions to your Data Cloud Salesforce Connector Integration permission set in your Salesforce CRM org to ingest standard and custom objects and fields into Data Cloud.

| If you are prompted with an error stating those objects cannot be added, you might need to Enable Object and Field Permissions to Access Salesforce CRM in Data Cloud (see below for further details). |

For the "Personalization with CDP" use case, we have created data streams for Salesforce CRM objects Orders and Products after enabling permissions to ingest data.

To add permissions for objects and their fields:

-

In the Salesforce org containing the objects and fields you want to ingest into Data Cloud, go to Setup, enter "Permission" in the Quick Find box, and select Permission Sets.

-

Select the Data Cloud Salesforce Connector Integration permission set.

| The permission set is available only after you connect your CRM org to Data Cloud. |

-

From Apps, select Object Settings.

-

Select the object to ingest into Data Cloud.

-

To change object permissions, click Edit.

-

Enable Read and View All permissions for the object and Read Access for each field.

-

Click Save.

Repeat these steps for all objects and fields you want to ingest into Data Cloud.

To create data streams from Salesforce CRM data source:

-

In Data Cloud, navigate to Data Streams.

-

Click New.

-

Select the Salesforce CRM data source and click Next.

-

To create your data stream, select a Salesforce org. If you have only one Salesforce org connected to Data Cloud, it’s selected by default.

-

Select the All Objects tab and click Next.

-

Review the fields to include in your data stream. All fields are preselected by default. The number of fields available for the object is shown in parentheses.

-

Deselect any of the fields not required for your data stream in the Header Label.

-

If needed, add these formula fields and then click Next:

-

Field Label: The display name for a data stream field.

-

Field API Name: The programmatic reference for a data stream field.

-

Formula Return Type: The data type corresponding to the newly derived field. Options include Number, Text, and Date.

-

-

Fill in deployment details.

-

Data Stream Name: Defaults to Object Label and Salesforce org ID, but can be edited.

-

Ongoing Refresh Settings: Frequency and timing of new data retrieval. The Frequency is hourly and is set automatically.

-

-

Click Deploy. Your Salesforce CRM data stream is now created.

To create more data streams, repeat steps 6 through 10.

Create an Ingestion API Data Stream

After uploading the schema file, create a data stream from your source objects.

-

In Data Cloud, select Data Streams.

-

In recently viewed data streams, click New.

-

Click Ingestion API.

-

If you have more than one Ingestion API configured, select the one you want from the dropdown.

-

Check the objects found in the schema you want to use and click Next.

-

In the New Data Stream dialog box, configure the following:

-

Primary Key: Data Cloud requires a true Primary Key. If one does not exist, create a Formula Field to serve as the Primary Key.

-

Category: Choose between Profile and Engagement.

Note: For the "Personalization with CDP" use case, the category for all the objects in the schema is Profile.

-

Record Modified Date: To order Profile modifications, use the Record Modified Date.

Note: A record modified field that indicates when each incoming record was last modified is required for Engagement object types. While the field is optional for Profile and Other objects, we encourage you to provide it to ensure incoming records are processed in the right order.

-

Date Time Field: Used to represent when Engagement from an external source occurred at ingestion.

-

-

Click Next.

-

On the final summary screen, review the list of data streams that Data Cloud created.

-

Click Deploy. If you’ve only created one data stream, the data stream’s record page appears. If you’ve created multiple data streams, the view refreshes to show all recently viewed data streams.

-

Map the data for the data stream before use. Wait up to one hour for your data to appear in your data stream.
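To see why the Record Modified Date matters, the sketch below keeps only the most recently modified version of each record when several versions arrive out of order, and formats timestamps in the ISO 8601 UTC Zulu pattern that the schema requirements call for. It illustrates the ordering idea only and is not a description of how Data Cloud processes records internally; the field names `member_id`, `points`, and `updated_at` are hypothetical.

```python
from datetime import datetime, timezone

ZULU_FORMAT = "%Y-%m-%dT%H:%M:%S.%fZ"


def parse_zulu(value):
    """Parse a yyyy-MM-dd'T'HH:mm:ss.SSS'Z' string into an aware datetime."""
    return datetime.strptime(value, ZULU_FORMAT).replace(tzinfo=timezone.utc)


def format_zulu(moment):
    """Render an aware datetime as yyyy-MM-dd'T'HH:mm:ss.SSS'Z'."""
    utc = moment.astimezone(timezone.utc)
    millis = utc.microsecond // 1000
    return utc.strftime("%Y-%m-%dT%H:%M:%S.") + f"{millis:03d}Z"


def latest_by_key(records, key_field, modified_field):
    """Keep, for each primary key, the record with the newest modified date."""
    latest = {}
    for record in records:
        key = record[key_field]
        kept = latest.get(key)
        if kept is None or (parse_zulu(record[modified_field])
                            >= parse_zulu(kept[modified_field])):
            latest[key] = record
    return latest


# Hypothetical records; the older M-001 version arrives after the newer one.
records = [
    {"member_id": "M-001", "points": 1200, "updated_at": "2024-01-15T10:30:00.000Z"},
    {"member_id": "M-001", "points": 900, "updated_at": "2024-01-10T08:00:00.000Z"},
    {"member_id": "M-002", "points": 50, "updated_at": "2024-01-12T09:15:00.500Z"},
]
latest = latest_by_key(records, "member_id", "updated_at")
print(latest["M-001"]["points"])
print(format_zulu(datetime(2024, 1, 15, 10, 30, 0, 123456, tzinfo=timezone.utc)))
```

Without a modified date, the late-arriving older version of `M-001` would be indistinguishable from a genuine update.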

Create a Connected App for Data Cloud Ingestion API

Before you can send data into Data Cloud using the Ingestion API via MuleSoft’s Salesforce CDP connector, you must configure a Connected App. Refer to this link for more details on creating a connected app.

As part of your Connected App setup for the Ingestion API, you must select the following OAuth scopes:

-

Access and manage your Data Cloud Ingestion API data (cdp_ingest_api)

-

Manage Data Cloud profile data (cdp_profile_api)

-

Perform ANSI SQL queries on Data Cloud data (cdp_query_api)

-

Manage user data via APIs (api)

-

Perform requests on your behalf at any time (refresh_token, offline_access)
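If you need to exercise the Connected App outside MuleSoft, the scopes listed above are passed as a space-separated `scope` parameter. The sketch below builds a standard Salesforce OAuth 2.0 authorization URL; the client ID and redirect URI are placeholders, and the assumption that you are testing with the web server (authorization code) flow is ours, since the MuleSoft connector may be configured with a different flow.

```python
from urllib.parse import parse_qs, urlencode, urlparse

# Scope identifiers from the list above.
INGESTION_SCOPES = [
    "cdp_ingest_api",
    "cdp_profile_api",
    "cdp_query_api",
    "api",
    "refresh_token",
    "offline_access",
]


def build_authorize_url(login_host, client_id, redirect_uri):
    """Build an authorization URL that requests the Ingestion API scopes."""
    query = urlencode({
        "response_type": "code",
        "client_id": client_id,
        "redirect_uri": redirect_uri,
        "scope": " ".join(INGESTION_SCOPES),
    })
    return f"https://{login_host}/services/oauth2/authorize?{query}"


# Placeholder client ID and redirect URI.
url = build_authorize_url(
    login_host="login.salesforce.com",
    client_id="<connected-app-consumer-key>",
    redirect_uri="https://localhost/callback",
)
print(url)
```

Keeping the scope list in one constant makes it easy to compare against the scopes selected on the Connected App when an authorization request is rejected.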

Configure MuleSoft’s Salesforce CDP Connector

Anypoint Connector for Salesforce CDP (Data Cloud Connector) provides customers a pipeline to send data into Data Cloud.

This connector works with the Data Cloud Bulk and Streaming API, depending on the operation you configure. Each API call uses a request/response pattern over an HTTPS connection. All required request headers, error handling, and HTTPS connection configurations are built into the connector.

Refer to this link for details on configuration and operations for Data Cloud Connector.

For the "Personalization with CDP" use case, refer to the CDP System API specification and implementation template.

Data Modeling and Data Mapping

Data Cleansing and Preparation

Cleaning and preparing your data is critical for success in using Data Cloud’s segmentation and activation capabilities.

-

Formula Expression Library - When you create a Data Cloud data stream, you can choose to generate more fields. These supplemental fields can be hard-coded or derived from other fields in the data stream.

-

Formula Expression Use Cases - These use cases are examples of using formula expression functionality in Data Cloud.

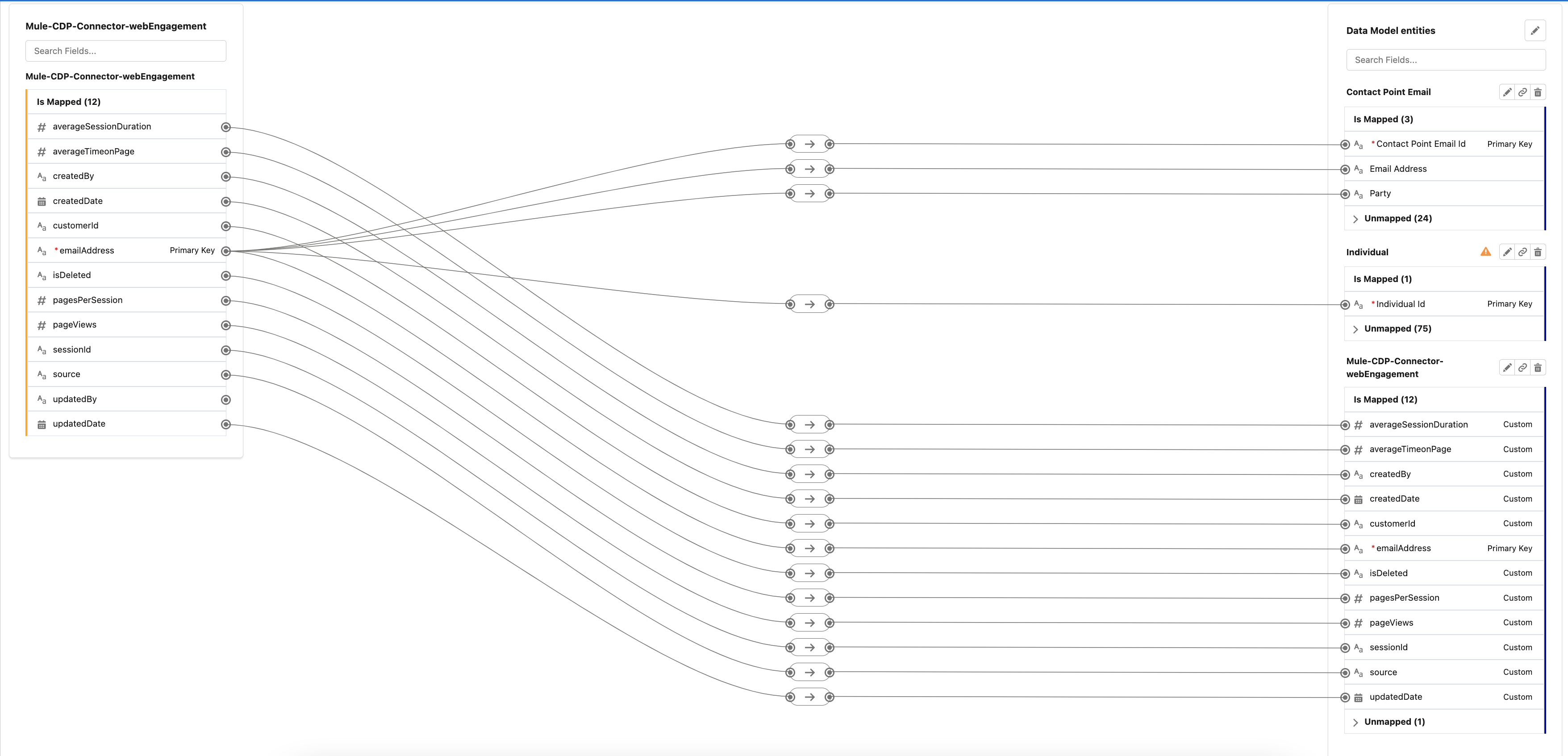

Data Mapping

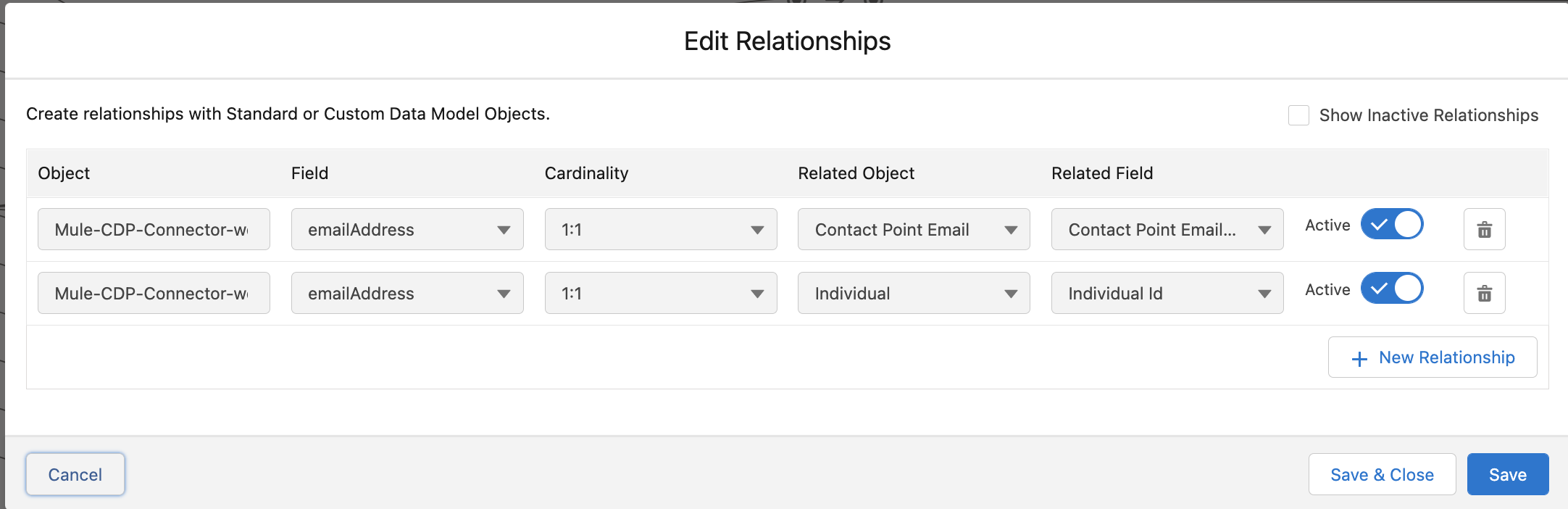

After creating your data streams, you must associate your data source objects (DSOs) to data model objects (DMOs). Only mapped fields and objects with relationships can be used for Segmentation and Activation.

On the Data Stream detail page or after deploying your data streams, click Start Data Mapping.

On the Data Streams mapping canvas, you can see both your DSOs and target DMOs. To map one to another, click the name of a DSO and connect it to the desired DMO. For example, you can map the DSO firstname to the target First Name field using this method.

-

Data Mapper Views - Select table view or visual view when mapping your data in Data Cloud.

-

Data Model Objects - Objects in the data model created by the customer for Data Cloud implementation are called Data Model Objects. If a new object is created, it can use a reference object. If a Data Model Object uses a reference object, it inherits the name, shape, and semantics of the reference object. This Data Model Object is called a Standard Object. You can also choose to define an entirely custom Data Model Object, called a Custom Object.

-

Required Data Mappings - When mapping your party area data, complete the required fields and relationships to successfully use Identity Resolution, Segmentation, and Activation.

For the "Personalization with CDP" use case, we mapped our MuleSoft Web Engagement data to a custom DMO.

Identity Resolution

Use Identity Resolution to match and reconcile data about people into a comprehensive view of your customer called a unified profile. Identity Resolution uses matching and reconciliation rulesets to link the most relevant data from all the associated profiles of each unified profile. Identity Resolution is powered by rulesets to create unified profiles in Data Cloud.

Access Identity Resolution from Data Cloud after mapping entities to the CIM. Entities must be mapped before you can create rulesets. Additional Information can be found here.

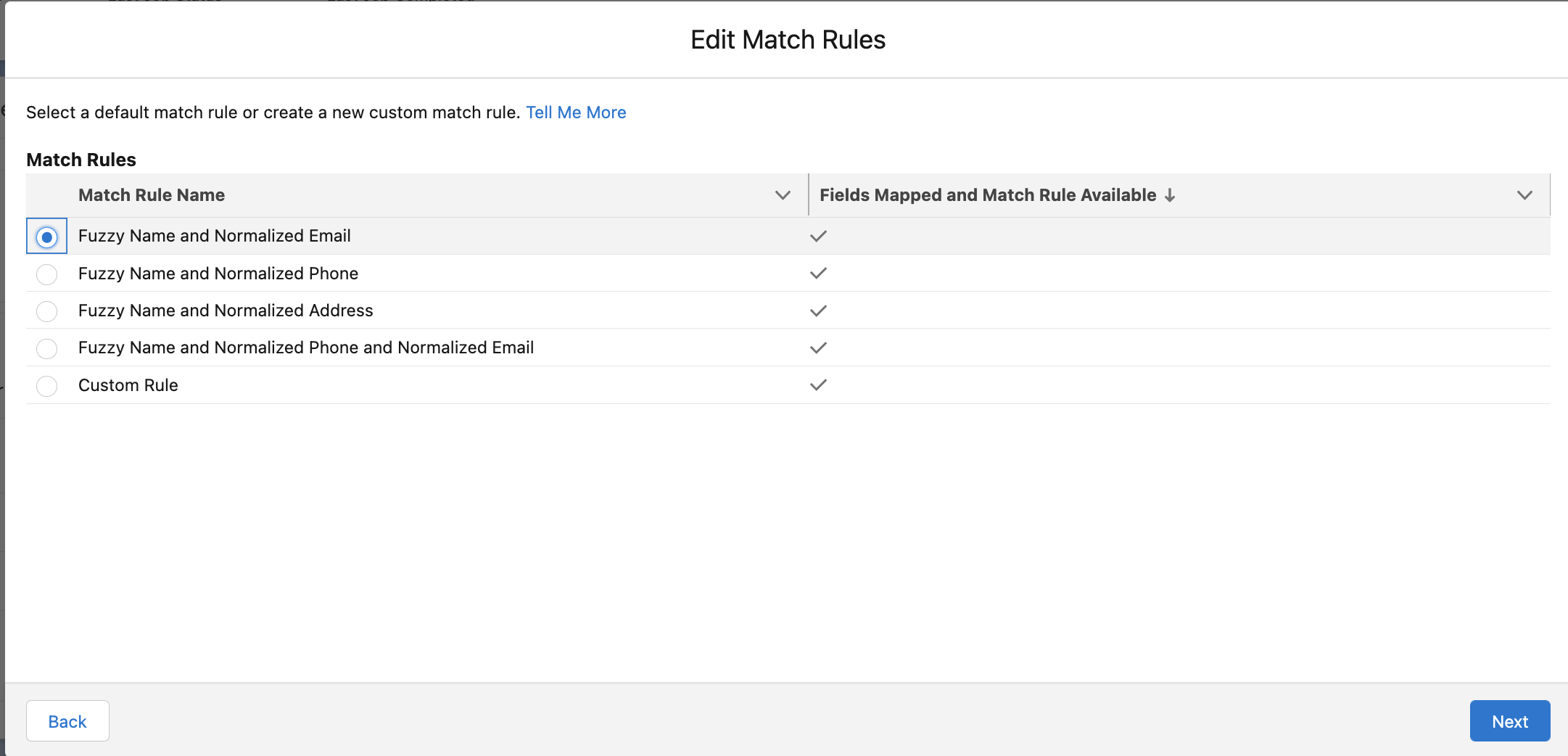

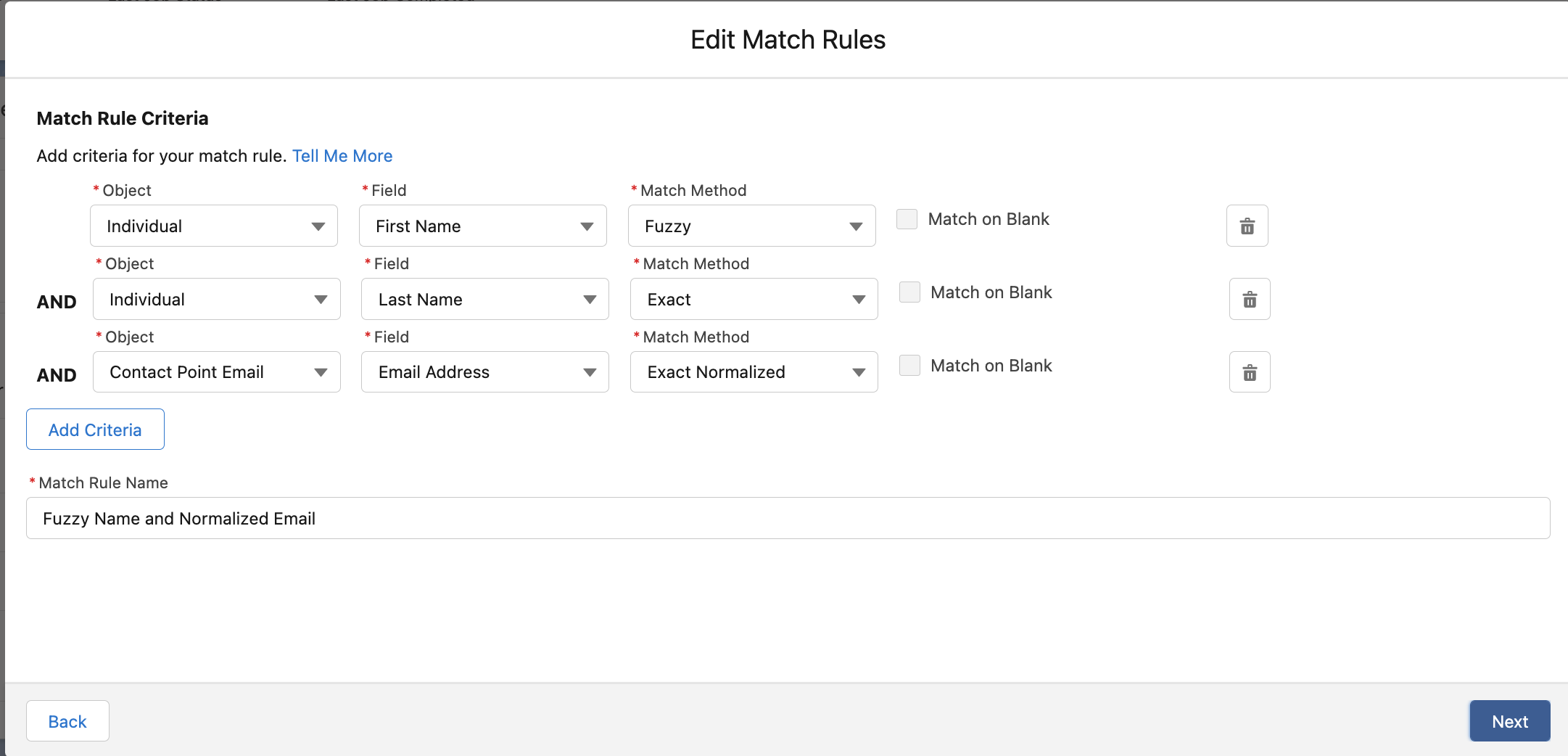

For the "Personalization with CDP" use case, we use the Fuzzy Name and Normalized Email match rule, which combines Fuzzy First Name, Exact Last Name, and Normalized Email Address.
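To build intuition for what such a rule does, here is a small sketch that combines a fuzzy first-name comparison, an exact last-name comparison, and a normalized email comparison. It is an illustration only: Data Cloud's actual matching algorithms are not described in this guide, so the use of `difflib`, the 0.8 similarity threshold, the case-insensitive last-name comparison, and the lowercase-and-trim email normalization are all assumptions.

```python
from difflib import SequenceMatcher


def normalize_email(email):
    """Assumed normalization: trim whitespace and lowercase."""
    return email.strip().lower()


def fuzzy_equal(left, right, threshold=0.8):
    """Treat two names as equal when their similarity ratio is high enough."""
    ratio = SequenceMatcher(
        None, left.strip().lower(), right.strip().lower()).ratio()
    return ratio >= threshold


def is_match(a, b):
    """Fuzzy first name AND exact last name AND normalized email."""
    return (
        fuzzy_equal(a["first_name"], b["first_name"])
        # Assumption: "exact" is applied after trimming and lowercasing.
        and a["last_name"].strip().lower() == b["last_name"].strip().lower()
        and normalize_email(a["email"]) == normalize_email(b["email"])
    )


# Hypothetical profiles from two different sources.
crm_profile = {"first_name": "Jon", "last_name": "Rivera",
               "email": "Jon.Rivera@Example.com "}
web_profile = {"first_name": "John", "last_name": "Rivera",
               "email": "jon.rivera@example.com"}
print(is_match(crm_profile, web_profile))
```

All three criteria must hold for two profiles to match, so a similar first name alone is never enough to merge two individuals.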

To create your Identity Resolution Rules, follow the steps below:

-

Go to the Identity Resolution tab in the main menu.

-

Click New in the upper right corner.

-

Select Individual from the dropdown for the Entity. Do not add a Ruleset ID for your Primary Ruleset.

-

Create a Ruleset Name. If you are using more than one ruleset for testing, having the name reference the rules included will help differentiate the rulesets.

-

Add a Rule Description (optional).

-

Click Save to save the ruleset.

-

Click the Configure button to configure your Match Rules.

-

Click the Configure button next to Match Rule 1 to configure your Match Rules.

-

Add the desired Match Rules.

-

Click the Next button and add the desired criteria for your Match Rules.

-

Click the Next button. Click Add Match Rule to add any additional rules, or click Save to complete Match Rules.

Once the ruleset has run, review the Identity Resolution Summary and Processing History screens to validate your Identity Resolution Rules. Add applicable Individual Reconciliation Rules.

Create and Activate Segments

Segmentation

Creating segments is simple in Data Cloud.

-

In Data Cloud, click Segments.

-

When you see the list of already created segments, if any, click New.

-

Fill in all desired fields under Segment Details. The fields Segment On, Segment Name, and Publish Schedule are required.

-

Segment On: Identifies the entity that your segment builds on.

-

Segment Name: Give your Segment a unique name that’s easy to remember and recognize.

-

Segment Description: Provide detail about a segment’s use, contents, or timeframes for later review.

-

Publish Schedule: Determines when and how often your segment publishes to activation targets.

-

-

Save your changes.

| Leave the Publish Schedule as "Don’t Refresh for now," and then fill it in after you complete your segment filters. Segments can be scheduled to publish every 12 or 24 hours. |

Segment On: Segment On defines the target entity (object) used to build your segment. For example, you can build a segment on Unified Individual or Account. You can choose any entity marked as type Profile during ingestion.

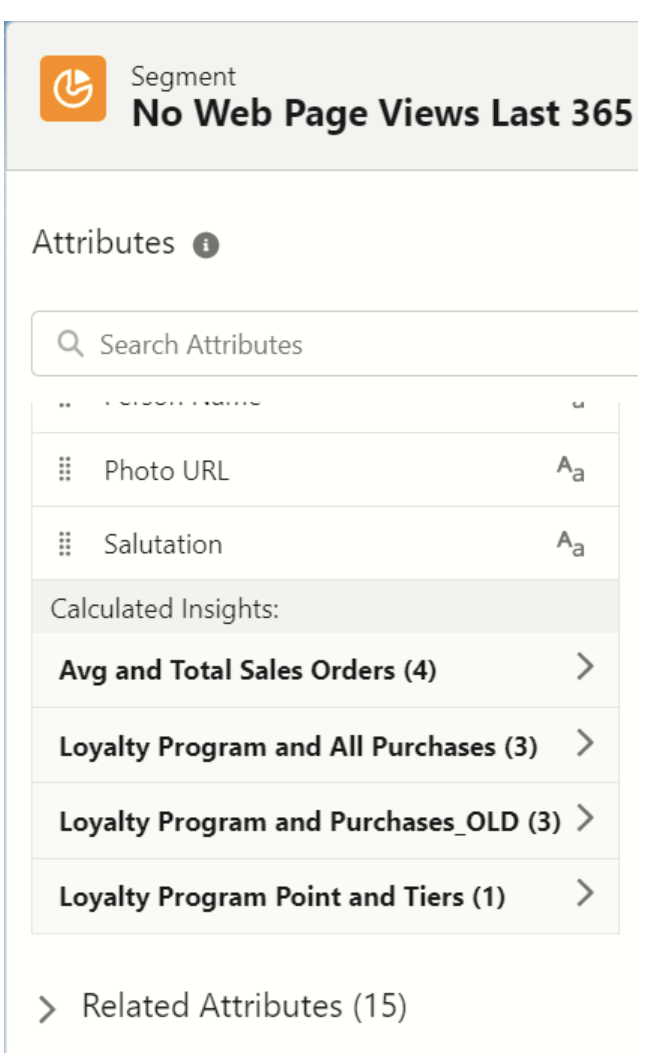

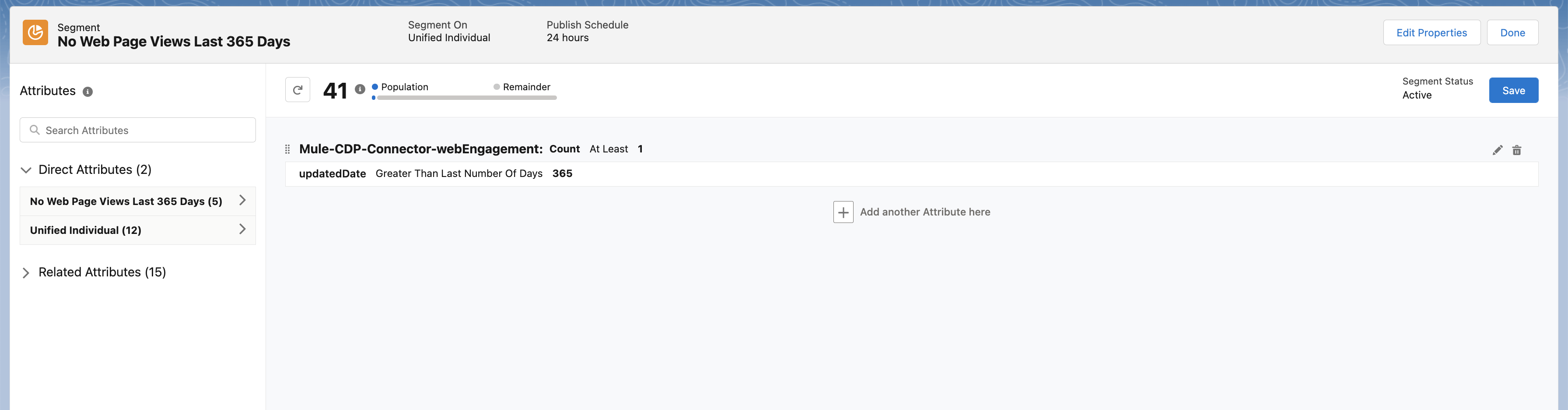

For the "Personalization with CDP" use case, we created several segments, all segmented on Unified Individual. For one of these segments, we wanted an audience with no Web Page Views in the last 365 days. We dragged the updated Date attribute from the Attribute Library onto the canvas, selected the "Greater Than Last Number of Days" operator, and entered "365" for the number of days. In the Publish Schedule field, we selected a schedule of every 24 hours.
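The filter logic behind this segment can be pictured as a simple date comparison. The sketch below assumes that "Greater Than Last Number of Days" selects records whose date is older than the given number of days, which is our reading of the operator rather than a documented definition; the individual IDs and dates are made up.

```python
from datetime import date, timedelta


def inactive_individuals(last_view_dates, today, days=365):
    """Return IDs whose most recent page view is older than `days` days."""
    cutoff = today - timedelta(days=days)
    return sorted(
        individual
        for individual, last_view in last_view_dates.items()
        if last_view < cutoff
    )


# Hypothetical last page-view dates per unified individual.
last_view_dates = {
    "ind-1": date(2022, 12, 31),
    "ind-2": date(2024, 5, 1),
    "ind-3": date(2023, 9, 15),
}
print(inactive_individuals(last_view_dates, today=date(2024, 6, 1)))
```

Passing `today` explicitly keeps the function deterministic, which makes it easier to reason about who falls on either side of the cutoff.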

Activation Targets

Create activation targets, build, and activate data segments with Data Cloud.

For the "Personalization with CDP" use case, we have created a Cloud File Storage (S3) activation target and a Marketing Cloud activation target.

Activation Target - Cloud File Storage (S3)

Create an activation target in Data Cloud to publish segments to Cloud Storage. You can activate S3 without mapping contact points. Before you can create an Activation Target, determine your S3 access key and secret key.

-

Click Activation Targets.

-

Click New Activation Target.

-

Select Cloud File Storage.

-

Click Next.

-

Enter an easy to recognize and unique name.

-

Click Next.

-

Enter the S3 bucket and parent folder configured by your admin for your activation target.

-

To give access to your S3 location, enter your S3 access key and secret key. The S3 credentials provided must have the following permissions: s3:PutObject, s3:GetObject, s3:ListBucket, s3:DeleteObject, s3:GetBucketLocation. NOTE: To delete S3 access or secret keys, delete the activation target.

-

Select an export file format.

-

Click Save.
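The S3 permissions named in the steps above could be granted with an IAM policy along the following lines. This is a sketch, not a policy published by Salesforce: the bucket name is a placeholder, and splitting the actions into bucket-level and object-level statements follows general AWS practice. Your admin may want to narrow the object resource to the parent folder.

```python
import json

# Permissions listed in the step above.
REQUIRED_ACTIONS = [
    "s3:PutObject",
    "s3:GetObject",
    "s3:ListBucket",
    "s3:DeleteObject",
    "s3:GetBucketLocation",
]
# These two actions apply to the bucket itself rather than to objects in it.
BUCKET_LEVEL_ACTIONS = {"s3:ListBucket", "s3:GetBucketLocation"}


def build_policy(bucket):
    """Build an IAM policy document granting the required S3 actions."""
    bucket_arn = f"arn:aws:s3:::{bucket}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": [a for a in REQUIRED_ACTIONS
                           if a in BUCKET_LEVEL_ACTIONS],
                "Resource": bucket_arn,
            },
            {
                "Effect": "Allow",
                "Action": [a for a in REQUIRED_ACTIONS
                           if a not in BUCKET_LEVEL_ACTIONS],
                "Resource": f"{bucket_arn}/*",
            },
        ],
    }


# Placeholder bucket name.
policy = build_policy("example-activation-bucket")
print(json.dumps(policy, indent=2))
```

Generating the document from the action list makes it easy to confirm that nothing from the required set was dropped.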

Your Cloud File Storage activation target is created, and the following items are added to Cloud Storage:

-

A metadata file that describes the segment definition.

-

Data files that contain the segment members with additional attributes.

-

A segment-data folder to indicate that writing output files to the folder has completed. If this file is missing, it indicates that either the files are being written or the data was only partially written and the producer failed.

After you create and activate segments to Cloud File Storage, a subfolder called Salesforce-c360-Segments is automatically created when the first segment is activated to Cloud File Storage.

-

Access Cloud File Storage.

-

Navigate to the bucket name you configured in Cloud File Storage Activation Target.

-

Navigate to /first_party/Salesforce_c360_Segments. Segments are created in YYYY/MM/DD/HH/{first 100 characters of segment name}__{20 characters of activation name}_{timestamp in yyyyMMddHHmmsssSSS format}.

Activation Target - Marketing Cloud

Before creating an activation target, configure the Marketing Cloud connector in the Data Cloud Setup page.

-

Click the Setup gear icon and then Data Cloud Setup.

-

Select Marketing Cloud.

-

Enter Credentials to authenticate your Marketing Cloud account. You can proceed with the next step in the setup only if the authentication is successful.

-

Complete the Data Source setup step if you are planning to ingest data from Marketing Cloud into Data Cloud. Otherwise, this step is optional.

| For the "Personalization with CDP" use case, this step is skipped. |

-

Select Business Units to activate - this step is optional. To add or remove business units (BU), click the arrows between the two columns.

| For the "Personalization with CDP" use case, we have selected business units to publish segments to Marketing Cloud. |

Next, create an activation target in Data Cloud to publish segments to Marketing Cloud business units.

-

Click Activation Targets.

-

Click New.

-

Select Marketing Cloud.

-

Click Next.

-

Enter an easy to recognize and unique name.

Marketing Cloud activation target names cannot be more than 128 characters, start with an underscore, be all numbers, or include these characters: @ %^ = < ' * + # $ / \ ! ? ( ) { } [ ] , . \ \

|

-

Click Next.

-

To add or remove business units (BU) to receive the published segments, click the arrows between the two columns. When an activation target has multiple BUs, the activation filters the contacts by the BUs. The segment activates as a Shared Data Extension (SDE) and not as a Data Extension (DE) to Marketing Cloud. If an activation target has multiple business units configured, modify the activation target configuration to include one business unit only.

-

Save your changes.

Your Marketing Cloud activation target is created.

Activation

Activation is the process that materializes and publishes a segment to activation platforms. An activation target is used to store authentication and authorization information for a given activation platform. You can publish your segments, include contact points, and additional attributes to the activation targets.

View, change, and delete your Activations in Data Cloud for publishing of segments to activation platforms. Navigate to an Activation record to view details and publish history for that Activation.

In Activations, the Activation History shows when and how segments were published. For segments published to a Marketing Cloud activation target, additional Accepted and Rejected columns only appear in Activation Publish History to provide more details.

To view the publish history of a segment:

-

In Data Cloud, navigate to your Activations.

-

Select the activation to review.

-

View details in Activation History.

To create Activation for a Segment:

After you create a segment in Data Cloud, you can publish a segment to an activation target.

-

In Data Cloud, click Segments.

-

Select a segment.

-

In Activations, click New.

-

Select an Activation Target.

-

Select an entity from Activation Membership.

-

Click Next.

-

Select your contact points. Note: Selecting contact points is optional for S3 activations.

-

When contact points are mapped, select an existing path or click Edit to add, reorder, or delete sources and change source types and priority for each contact point. The Source Type Marketing Cloud option is selected by default.

-

For Marketing Cloud Activations, modify activations so that the source priority order is Marketing Cloud, and remove Any Source and Any Type, so new contacts won’t get introduced to Marketing Cloud from other sources. If an activation source priority has Any Source and Any Type configured, the activation will introduce contacts from other business units into the business unit configured for the activation target. If an activation source priority has other sources configured, activation introduces new contacts in Marketing Cloud.

-

-

To activate additional attributes, click Add Attributes.

-

Drag up to 100 additional attributes to the canvas and click Save.

The following two types of additional attributes can be added to your activation:

-

Attributes of the Activation Membership entity.

-

Attributes from entities mapped with a direct relationship to the Activation Membership entity.

-

-

Click to add a unique preferred attribute name for any attributes.

-

-

Click Next.

-

Enter a name and description for your activation. The following characters cannot be included in the Name field:

+ ! @ # $ % ^ * ( ) = { } [ ] \ . < > / " : ? | , _ & -

Click Save.

Your segment publishes on the next publish scheduled for the selected activation target.

Calculated Insights

The Calculated Insights feature lets you define and calculate multi-dimensional metrics from your entire digital state stored in Data Cloud.

Calculated Insights can be built using Calculated Insights Builder, ANSI SQL, Salesforce Package, or Streaming Insights. Details on all options and use cases can be found in the Data Cloud Help Documentation. Also check Processing Calculated Insights for the Calculated Insights schedule.

For the "Personalization with CDP" use case, we created Calculated Insights to gain visibility across our Loyalty and Sales Order data. Examples of Calculated Insights are available in our Data Cloud Help Documentation and in our Data Cloud Salesforce GitHub Instance.

Once created, Calculated Insights are available in the Attribute Library. You can also confirm and validate Calculated Insights via Data Explorer.